Learning to Shadow Hand-drawn Sketches

Qingyuan Zheng*¹, Zhuoru Li*², Adam W. Bargteil¹

¹University of Maryland, Baltimore County; ²Project HAT

CVPR 2020 (Oral presentation)

Abstract

We present a fully automatic method to generate detailed and accurate artistic shadows from pairs of line drawing sketches and lighting directions. We also contribute a new dataset of more than one thousand pairs of line drawings and shadows tagged with lighting directions. Remarkably, the generated shadows quickly communicate the underlying 3D structure of the sketched scene. Consequently, the shadows generated by our approach can be used directly or as an excellent starting point for artists. We demonstrate that the deep learning network we propose takes a hand-drawn sketch, builds a 3D model in latent space, and renders the resulting shadows. The generated shadows respect the hand-drawn lines and the underlying 3D space, and they contain sophisticated and accurate details, such as self-shadowing effects. Moreover, the generated shadows contain artistic effects, such as rim lighting or halos appearing from back lighting, comparable to those achievable with traditional 3D rendering methods.

Dataset

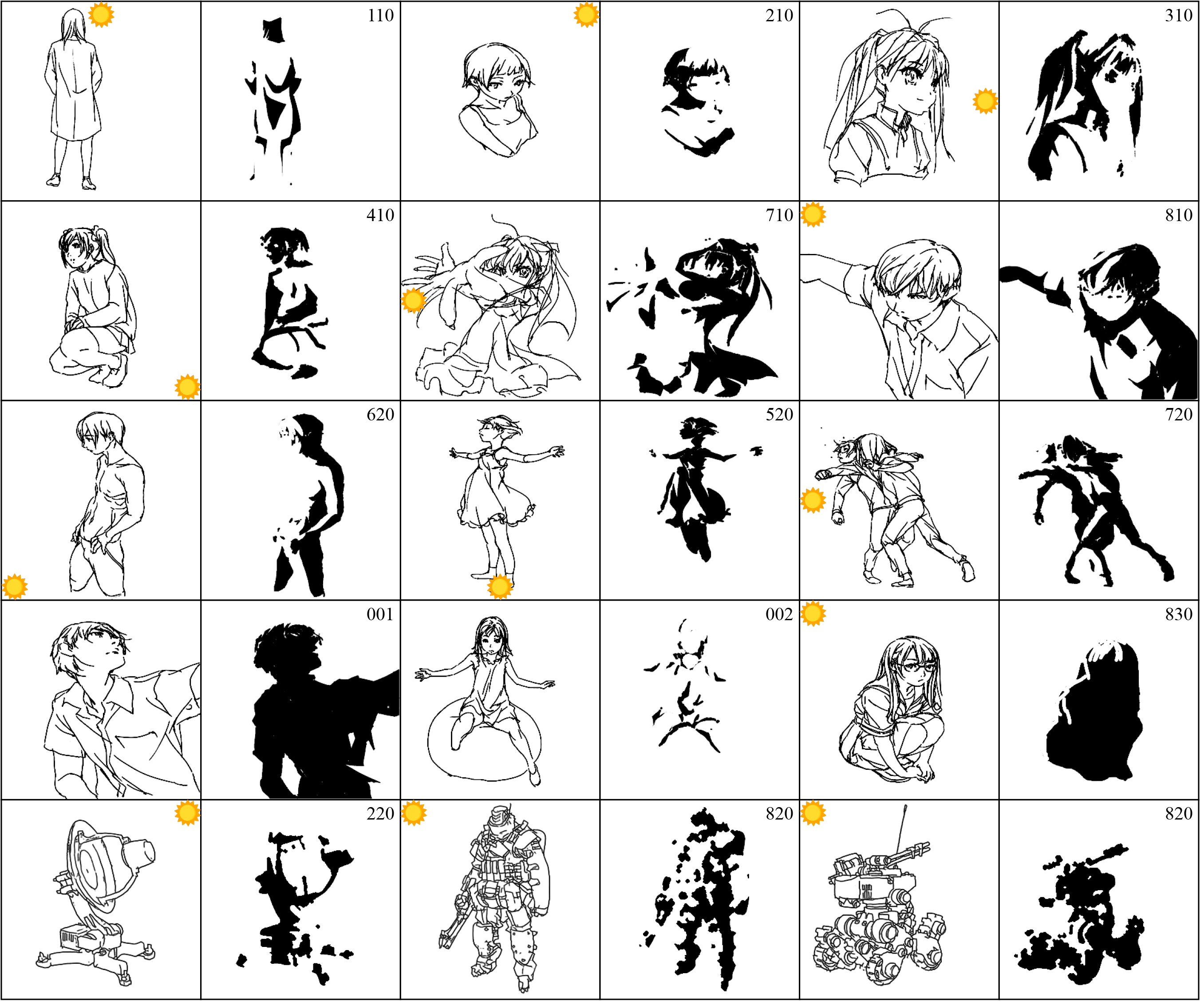

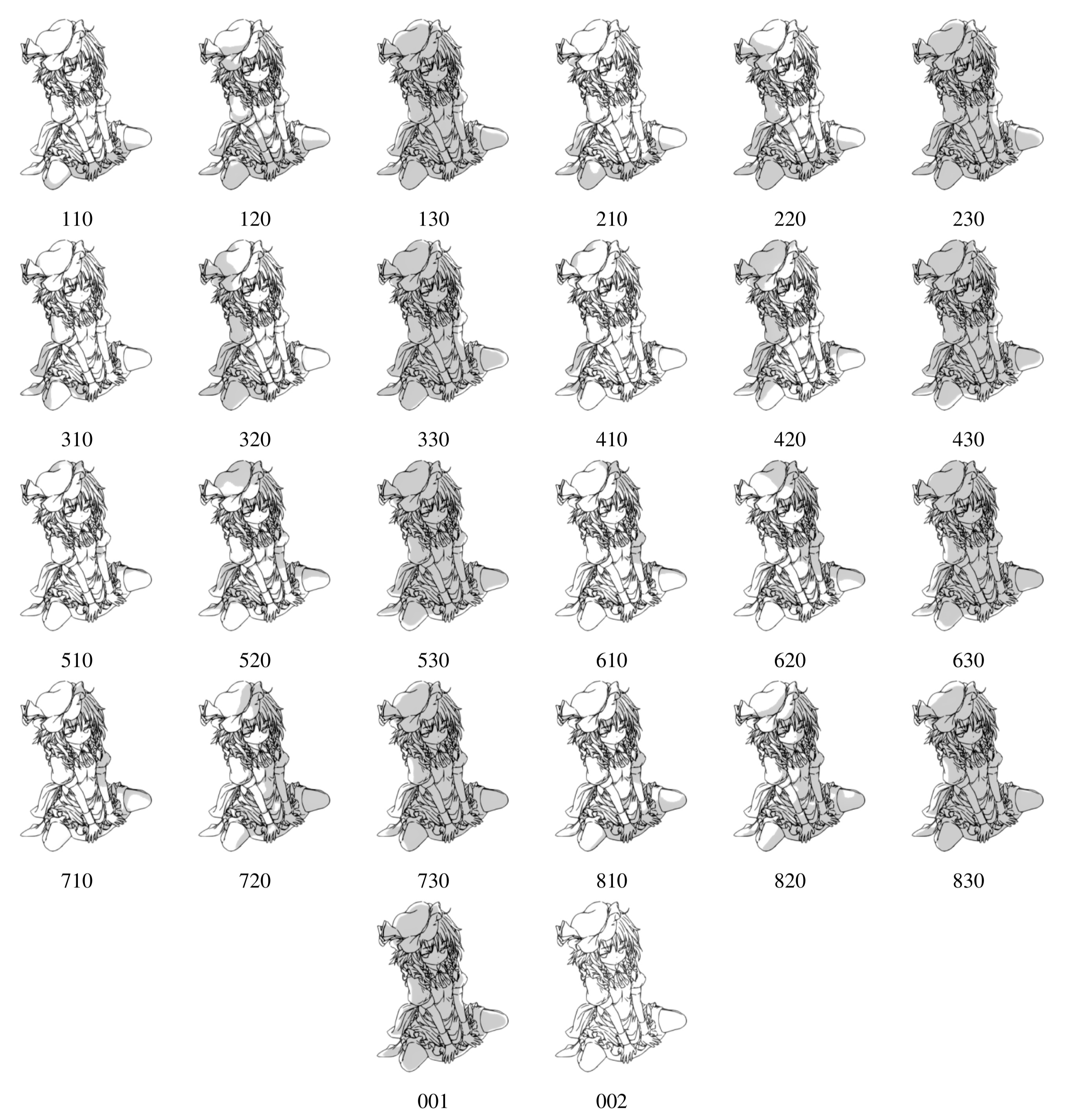

Samples from our dataset.

Our dataset comprises 1,160 sketch/shadow pairs covering a variety of lighting directions and subjects. By lighting direction, it contains 372 front-lighting, 506 side-lighting, 111 back-lighting, 85 center-back, and 86 center-front pairs. By subject, it contains 867 single-person, 56 multi-person, 177 body-part, and 60 mecha pairs.
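Both breakdowns account for all 1,160 pairs. The short Python sketch below checks this and prints each category's share of the dataset; the dictionary and function names are illustrative only and are not part of any released code.

```python
# Counts copied from the dataset description; names are illustrative.
LIGHTING = {
    "front": 372,
    "side": 506,
    "back": 111,
    "center-back": 85,
    "center-front": 86,
}
SUBJECTS = {
    "single-person": 867,
    "multi-person": 56,
    "body-part": 177,
    "mecha": 60,
}
TOTAL_PAIRS = 1160


def shares(counts):
    """Return each category's fraction of the pairs in this breakdown."""
    total = sum(counts.values())
    return {name: n / total for name, n in counts.items()}


if __name__ == "__main__":
    for label, counts in (("lighting", LIGHTING), ("subject", SUBJECTS)):
        # Each breakdown should cover every sketch/shadow pair exactly once.
        assert sum(counts.values()) == TOTAL_PAIRS, label
        for name, frac in shares(counts).items():
            print(f"{label:8s} {name:14s} {counts[name]:4d}  {frac:6.1%}")
```

Side lighting is the largest lighting category (about 44% of the pairs), and single-person drawings make up roughly three quarters of the subjects.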

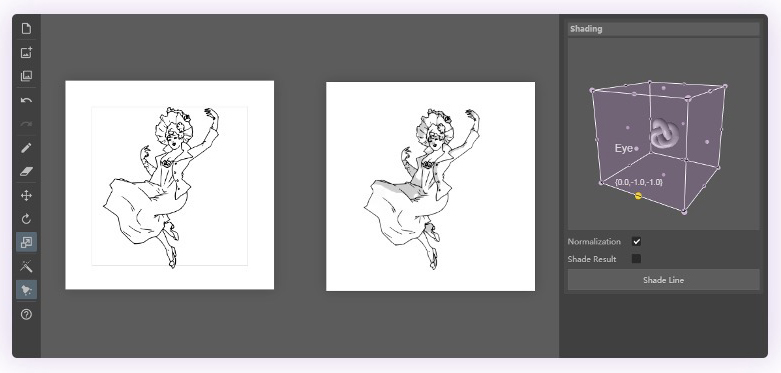

Demo

More interactive tools are available, including drag, rotate, scale, a drawing panel, a brush, and an eraser.

Video Demo

CVPR Presentation

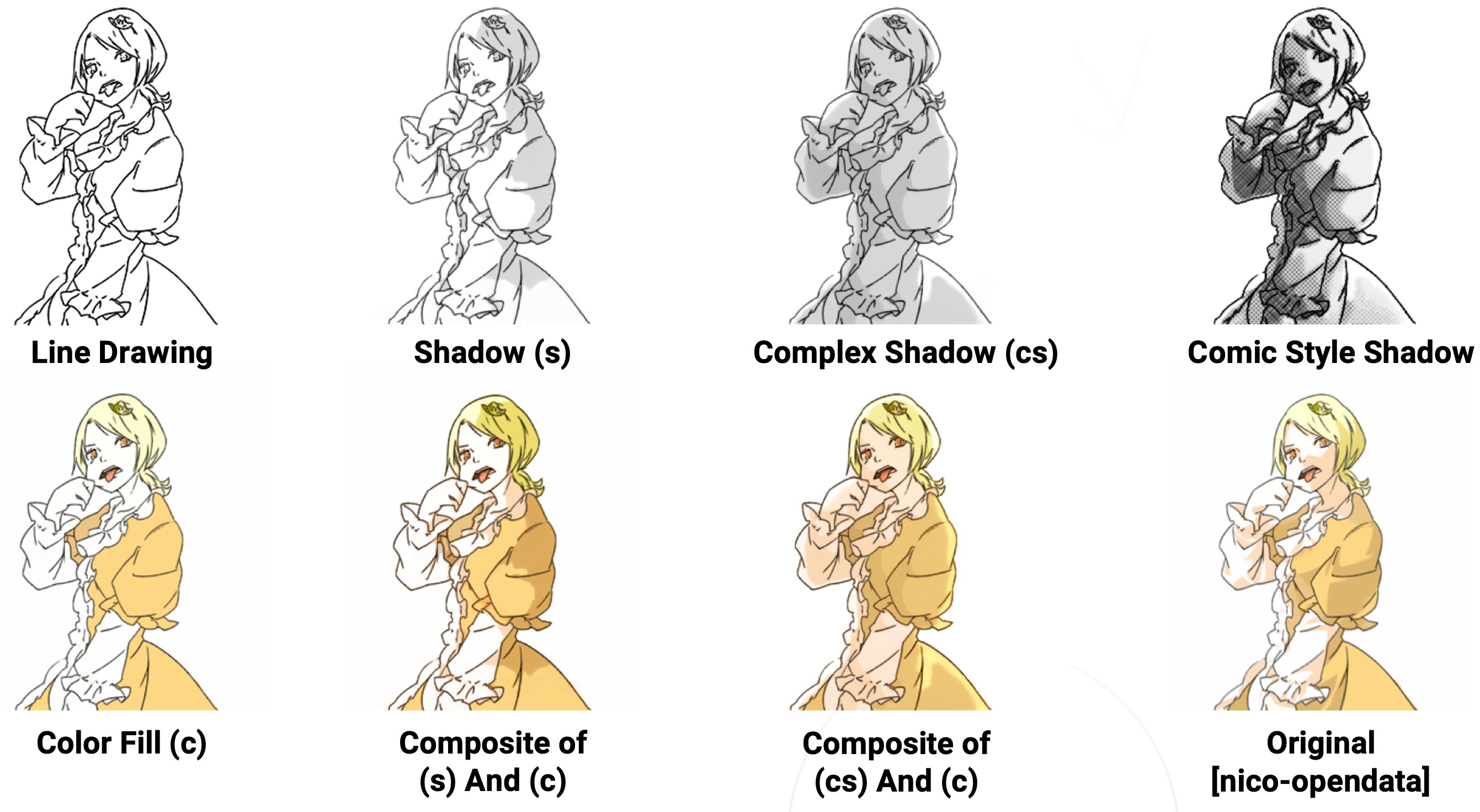

Artistic Control

Our shadows can be stylized with complex lighting directions, a half-tone appearance, and compositing with colorized pictures.
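This page does not say how these stylizations are produced. As an illustration only, under our own assumptions: a shadow map with values in [0, 1] (1 = fully shadowed) can be composited onto a colorized picture with a multiply blend, and a half-tone look can be approximated by ordered dithering with the standard 4x4 Bayer matrix. The function names, the shadow convention, and the `strength` parameter are hypothetical and not taken from the authors' code.

```python
# Hypothetical post-processing helpers; not the authors' implementation.
# Images are nested lists: shadow[y][x] in [0, 1] (1 = fully shadowed),
# color[y][x] = (r, g, b) with channels in [0, 255].

# Standard 4x4 Bayer matrix for ordered dithering.
BAYER_4X4 = [
    [0, 8, 2, 10],
    [12, 4, 14, 6],
    [3, 11, 1, 9],
    [15, 7, 13, 5],
]


def multiply_composite(color, shadow, strength=0.5):
    """Multiply-blend a grey shadow layer: out = c * (1 - strength * s)."""
    out = []
    for color_row, shadow_row in zip(color, shadow):
        row = []
        for (r, g, b), s in zip(color_row, shadow_row):
            k = 1.0 - strength * s  # k == 1 leaves lit pixels unchanged
            row.append((round(r * k), round(g * k), round(b * k)))
        out.append(row)
    return out


def halftone(shadow):
    """Binarize a shadow map with ordered dithering to mimic screen tone.

    A pixel becomes ink (1) when its shadow value exceeds the Bayer
    threshold at its position, so darker regions get denser dot patterns.
    """
    result = []
    for y, shadow_row in enumerate(shadow):
        row = []
        for x, s in enumerate(shadow_row):
            threshold = (BAYER_4X4[y % 4][x % 4] + 0.5) / 16.0
            row.append(1 if s > threshold else 0)
        result.append(row)
    return result
```

For example, a uniform shadow value of 0.5 over a 4x4 tile inks exactly 8 of its 16 pixels, and a fully shadowed pixel of color (200, 100, 50) composited with the default strength becomes (100, 50, 25).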

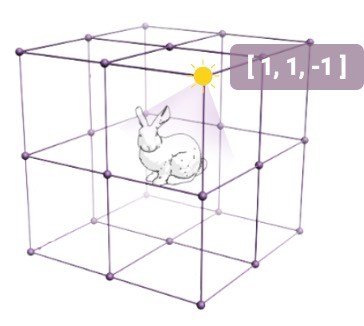

Virtual Lighting

Antoine Thomeguex

Kabuki Actor Segawa Kikunojo III as the Shirabyoshi Hisakata Disguised as Yamato Manzai

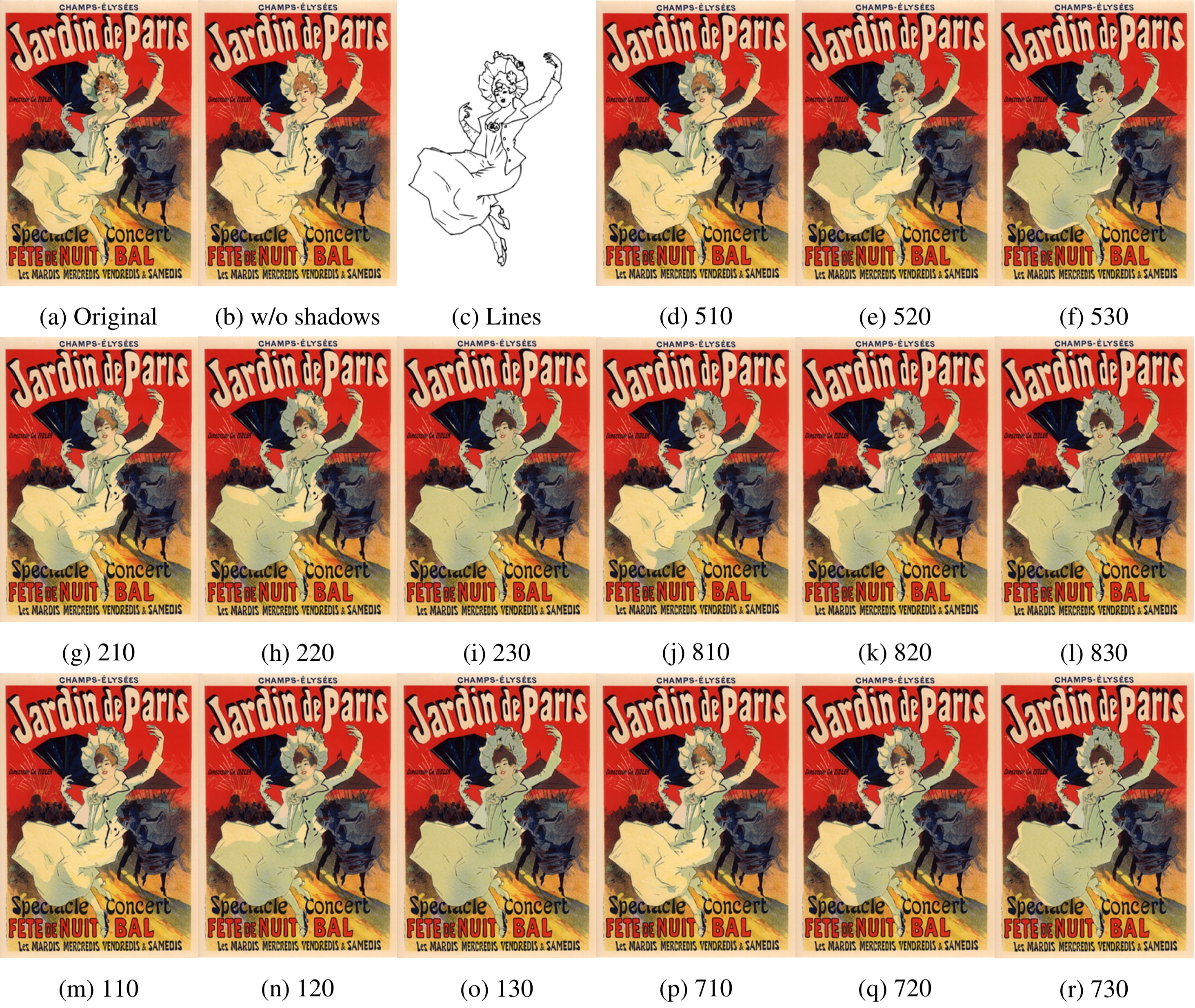

Jardin de Paris

More Results

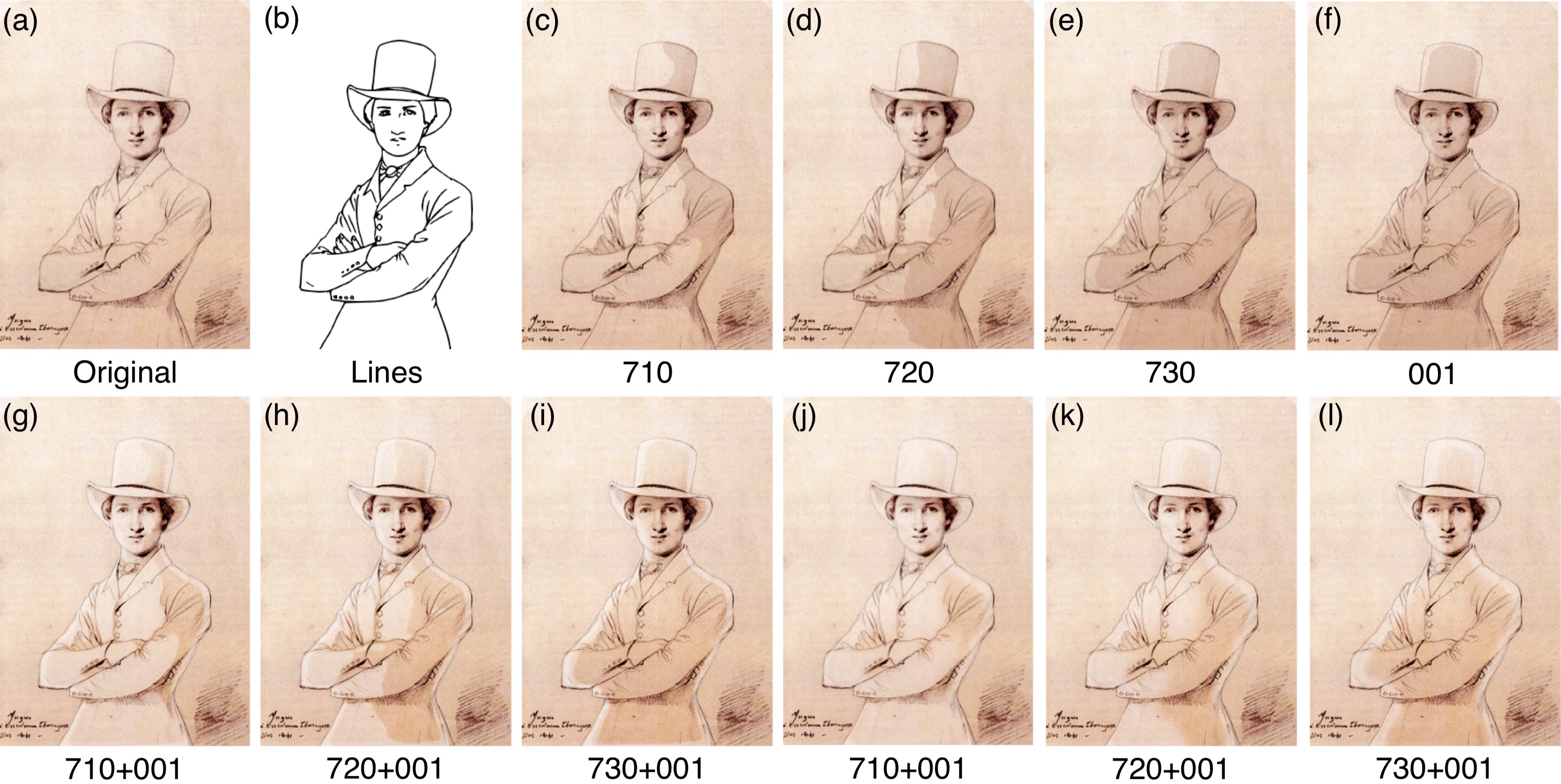

Coherent results learned from discrete tags.

Results from 26 discrete lighting directions.
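The page does not spell out how the 26 directions are arranged. One arrangement that yields exactly 26 is every non-zero point of a 3x3x3 grid, with each of x, y, z in {-1, 0, 1} and the origin excluded (3^3 - 1 = 26). The sketch below enumerates these as unit vectors and snaps an arbitrary light vector to the nearest one; the grid, the axis convention, and both function names are assumptions, not the paper's documented scheme.

```python
import itertools
import math


def discrete_light_directions():
    """Enumerate the 26 non-zero points of a 3x3x3 grid as unit vectors.

    Assumed (hypothetical) convention: components in {-1, 0, 1}; the grid
    center is skipped because it has no direction.
    """
    directions = []
    for x, y, z in itertools.product((-1, 0, 1), repeat=3):
        if (x, y, z) == (0, 0, 0):
            continue  # no lighting direction at the grid center
        norm = math.sqrt(x * x + y * y + z * z)
        directions.append((x / norm, y / norm, z / norm))
    return directions


def nearest_direction(light, directions=None):
    """Snap a continuous light vector to the closest discrete direction.

    Closeness is the dot product with each unit direction, so the input
    need not be normalized: any positive scaling gives the same answer.
    """
    if all(c == 0 for c in light):
        raise ValueError("light vector must be non-zero")
    if directions is None:
        directions = discrete_light_directions()
    return max(directions, key=lambda d: sum(a * b for a, b in zip(light, d)))
```

With this convention, `nearest_direction((0.9, 0.1, 0.0))` returns `(1.0, 0.0, 0.0)`, because the pure x-axis direction has a larger dot product (0.9) than the diagonal (1, 1, 0) direction (about 0.71).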

Results in the wild.

Media & Tools

- Yosuke Shinya, ShadeSketch demo, interactive online demo built with TensorFlow.js. (2K retweets)

- Dong Cheol, ShadeSketch API

- 栗子, 如何拯救一只拖更的漫画家 ("How to Rescue a Manga Artist Who Keeps Delaying Updates"), technical article by 果壳 (Guokr). (34.5K reads)

- Seamless, 手描きキャラクターに深層学習で影 ("Shadows for Hand-drawn Characters with Deep Learning"), technical article by Seamless. (2.4K retweets)

Thank you very much for your time and interest in our work!